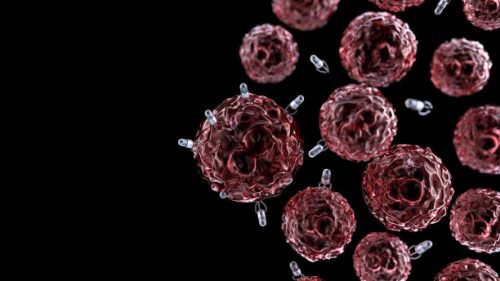

Explainable AI in Nanomedicine: Interpretable Models for Nano–Bio Interactions

Making nanomedicine understandable—one interpretable model at a time.

About Workshop:

This workshop covers Explainable AI (XAI) in nanomedicine, focusing on how interpretable machine-learning models can clarify nano–bio interactions, cellular uptake mechanisms, toxicity patterns, and targeting behaviors. Participants will learn how transparency-driven approaches such as SHAP, LIME, rule-based models, and interpretable neural networks help scientists understand why nanoparticles behave the way they do inside biological systems. Through structured lectures and conceptual demonstrations, the workshop connects nanotechnology, data science, and biomedical research to support evidence-driven, safer, and clinically translatable nanocarrier design.

Aim: To train participants in the principles and applications of Explainable AI for understanding nano–bio interactions and guiding nanomedicine development.

Workshop Objectives:

Participants will:

- Understand nano–bio interactions, cellular uptake, biodistribution, and toxicity pathways.

- Learn the fundamentals of Explainable AI (XAI) and interpretable machine learning.

- Explore model transparency tools such as SHAP, LIME, and feature importance analysis.

- Interpret AI predictions related to nanoparticle behavior and biological responses.

- Evaluate XAI frameworks for improving safety, efficacy, and regulatory acceptance of nanomedicine.

What will you learn?

Day 1 — Foundations of Nano–Bio Interactions & Interpretable ML

- Fundamentals of nano–bio interactions

  - Protein corona

  - Cellular uptake pathways

  - Biodistribution & organ targeting

  - Toxicity mechanisms

- Need for interpretability in nanomedicine

- Overview of interpretable ML models

  - Decision trees

  - Logistic regression

  - Rule-based classifiers

- Nanoparticle descriptors
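
To give a feel for the interpretable models listed for Day 1, here is a minimal sketch of a one-split decision tree (a "decision stump") trained on nanoparticle descriptors. Everything in it is an assumption made for illustration: the data are synthetic rather than measured, and the names `SAMPLES`, `FEATURES`, `fit_stump`, `gini`, and `majority` are hypothetical. It uses only the Python standard library and is not taken from the workshop material.

```python
# Synthetic, hypothetical data for illustration only (not measured values).
# Each row: ((hydrodynamic size in nm, zeta potential in mV), label), 1 = toxic.
SAMPLES = [
    ((20.0, 35.0), 1),
    ((25.0, 30.0), 1),
    ((30.0, -5.0), 0),
    ((80.0, 40.0), 1),
    ((90.0, -10.0), 0),
    ((120.0, -20.0), 0),
    ((150.0, 25.0), 1),
    ((200.0, -15.0), 0),
]
FEATURES = ["size_nm", "zeta_mV"]
NAMES = {0: "non-toxic", 1: "toxic"}


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def majority(labels):
    """Most common 0/1 label (ties resolve to 1)."""
    return 1 if 2 * sum(labels) >= len(labels) else 0


def fit_stump(samples):
    """Find the single split with the lowest weighted Gini impurity.

    Returns (feature_index, threshold, impurity, left_label, right_label).
    """
    best = None
    n = len(samples)
    for j in range(len(samples[0][0])):
        values = sorted({x[j] for x, _ in samples})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            left = [y for x, y in samples if x[j] <= t]
            right = [y for x, y in samples if x[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (j, t, score, majority(left), majority(right))
    return best


feature, threshold, impurity, left_label, right_label = fit_stump(SAMPLES)
rule = (f"IF {FEATURES[feature]} > {threshold:g} "
        f"THEN {NAMES[right_label]} ELSE {NAMES[left_label]}")
print(rule, f"(weighted Gini = {impurity:.3f})")
```

The fitted model is a single readable rule, which is what makes this family of models interpretable: the descriptor and threshold behind each prediction can be inspected directly. Real nanoparticle datasets would need deeper trees, validation, and far more samples.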

Day 2 — Explainable AI Techniques & Interpretation Tools

- Explainable AI frameworks

  - SHAP (Shapley values)

  - LIME (Local Interpretable Model-Agnostic Explanations)

- Global vs. local explanations

- Feature importance analysis

- Visualizing nanoparticle behavior using XAI

- Conceptual Python walkthrough: interpreting a nanoparticle toxicity model
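
The Shapley values that SHAP builds on can be computed exactly for a very small model, which helps show what a local explanation is. The sketch below is an assumption-laden illustration, not the workshop's walkthrough: `toxicity_score` is a hypothetical toy function (not a fitted or validated toxicity model), the descriptor values and the baseline are invented, and the code enumerates every feature coalition by brute force rather than calling the SHAP library.

```python
from itertools import combinations
from math import factorial

FEATURES = ["size_nm", "zeta_mV", "ligand_density"]


def toxicity_score(x):
    """Hypothetical toy model; coefficients are invented for illustration."""
    size, zeta, ligand = x
    score = 0.02 * zeta - 0.005 * size - 0.3 * ligand
    # Interaction term: small, strongly cationic particles score higher.
    if size < 50 and zeta > 20:
        score += 0.5
    return score


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating all coalitions of features.

    Features outside a coalition are set to their baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(len(others) + 1):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                without_i = [x[j] if j in coalition else baseline[j]
                             for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


# Hypothetical particle to explain, and a hypothetical reference particle.
x = (30.0, 35.0, 0.5)
baseline = (100.0, 0.0, 1.0)

phi = shapley_values(toxicity_score, x, baseline)
for name, value in zip(FEATURES, phi):
    print(f"{name:>15}: {value:+.3f}")

# Efficiency property: contributions sum to f(x) - f(baseline).
total = toxicity_score(x) - toxicity_score(baseline)
print(f"sum of contributions = {sum(phi):.3f}, f(x) - f(baseline) = {total:.3f}")
```

Each value is a local explanation: it states how much one descriptor moved this particular prediction away from the baseline. Averaging absolute values over many particles gives one common form of global feature importance. Brute-force enumeration scales exponentially with the number of features, which is why practical tools rely on approximations or model-specific algorithms.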

Day 3 — Applying XAI for Safer & Smarter Nanomedicine

- Using XAI to refine nanoparticle design

  - Surface chemistry

  - Core material type

  - Size & charge

  - Ligand density

- Case studies: interpreting nanoparticle biodistribution, uptake, toxicity

- XAI for regulatory science and risk assessment

- Future directions:

  - Trustworthy AI

  - Transparent nanomedicine pipelines

  - Clinically interpretable predictive models

Important Dates

21 Jan 2026, 07:00 PM Indian Standard Time (IST)

21 Jan 2026 to 23 Jan 2026, 08:00 PM Indian Standard Time (IST)

Get an e-Certificate of Participation!

Intended For:

- UG & PG students in Biotechnology, Nanotechnology, Pharmacy, Biomedical Sciences, AI/ML

- PhD scholars focusing on nanomedicine, drug delivery, ML, toxicology, or materials science

- Academicians integrating AI with nanotechnology or biomedical research

- Industry professionals from pharma, biotech, diagnostics, data science, and regulatory domains

Workshop Outcomes

By the end of the workshop, participants will be able to:

- Explain the role of XAI in scientific decision-making in nanomedicine.

- Apply interpretability concepts to understand nanoparticle datasets.

- Identify key nanoparticle descriptors influencing biological outcomes.

- Interpret model outputs related to toxicity, uptake, and biodistribution.

- Evaluate how XAI strengthens trust, reproducibility, and clinical translation in nanomedicine.